Diffusion-based Generation of Long and High-fidelity Stereoscopic 3D from Monocular Videos

Brief Introduction

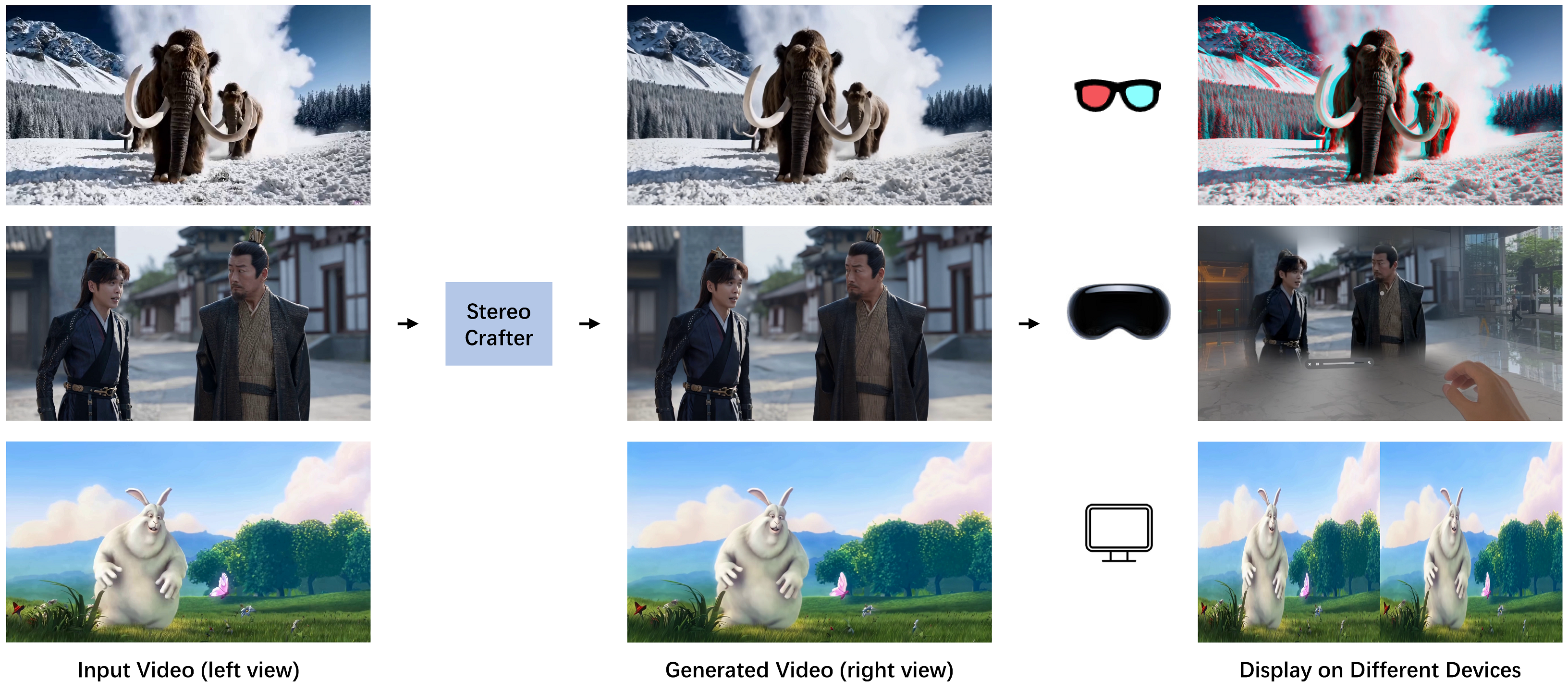

We present a novel framework for converting 2D videos into immersive stereoscopic 3D, addressing the growing demand for 3D content in immersive experiences. By leveraging foundation models as priors, our approach overcomes the limitations of traditional methods and achieves the high fidelity required by 3D display devices. The proposed system consists of two main steps: depth-based video splatting, which warps the input video and extracts an occlusion mask, and stereo video inpainting. We use pre-trained Stable Video Diffusion as the backbone and introduce a fine-tuning protocol for the stereo video inpainting task. To handle input videos of varying lengths and resolutions, we explore auto-regressive strategies and tiled processing. Finally, we develop a sophisticated data processing pipeline to construct a large-scale, high-quality dataset that supports our training. Our framework demonstrates significant improvements in 2D-to-3D video conversion, offering a practical solution for creating immersive content for 3D devices such as Apple Vision Pro and 3D displays. In summary, this work presents an effective method for generating high-quality stereoscopic videos from monocular input, with the potential to transform how we experience digital media.
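Tiled processing is one way to fit long or high-resolution inputs into a model with a fixed input size. As a rough illustration only, the sketch below splits a video into overlapping temporal windows, runs a per-window function, and averages the outputs where windows overlap. The helper names `window_starts` and `process_tiled`, the uniform averaging, and the window sizes are assumptions made for this example. They are not StereoCrafter's actual auto-regressive or tiling scheme, and `fn` is a hypothetical stand-in for the inpainting model.

```python
import numpy as np


def window_starts(length, window, overlap):
    """Start indices of windows of size `window` that overlap by `overlap`
    and together cover [0, length). The final window is shifted back so
    that it ends exactly at `length`."""
    if window >= length:
        return [0]
    stride = window - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than window")
    starts = list(range(0, length - window + 1, stride))
    if starts[-1] + window < length:
        starts.append(length - window)
    return starts


def process_tiled(frames, window, overlap, fn):
    """Run `fn` (hypothetical stand-in for the model) on overlapping
    temporal chunks of `frames` with shape (T, H, W, C) and average the
    outputs wherever chunks overlap."""
    T = frames.shape[0]
    out = np.zeros(frames.shape, dtype=np.float64)
    weight = np.zeros((T,) + (1,) * (frames.ndim - 1))  # chunks covering each frame
    for s in window_starts(T, window, overlap):
        out[s:s + window] += fn(frames[s:s + window])
        weight[s:s + window] += 1.0
    return out / weight


# Example: 10 frames, windows of 4 frames overlapping by 1 frame.
video = np.random.rand(10, 8, 8, 3)
print(window_starts(10, 4, 1))  # [0, 3, 6]
result = process_tiled(video, 4, 1, lambda chunk: chunk)
```

The same `window_starts` helper can be applied along the height and width axes to obtain overlapping spatial tiles; smoother blending weights (for example, linear ramps in the overlap) are a common alternative to uniform averaging.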

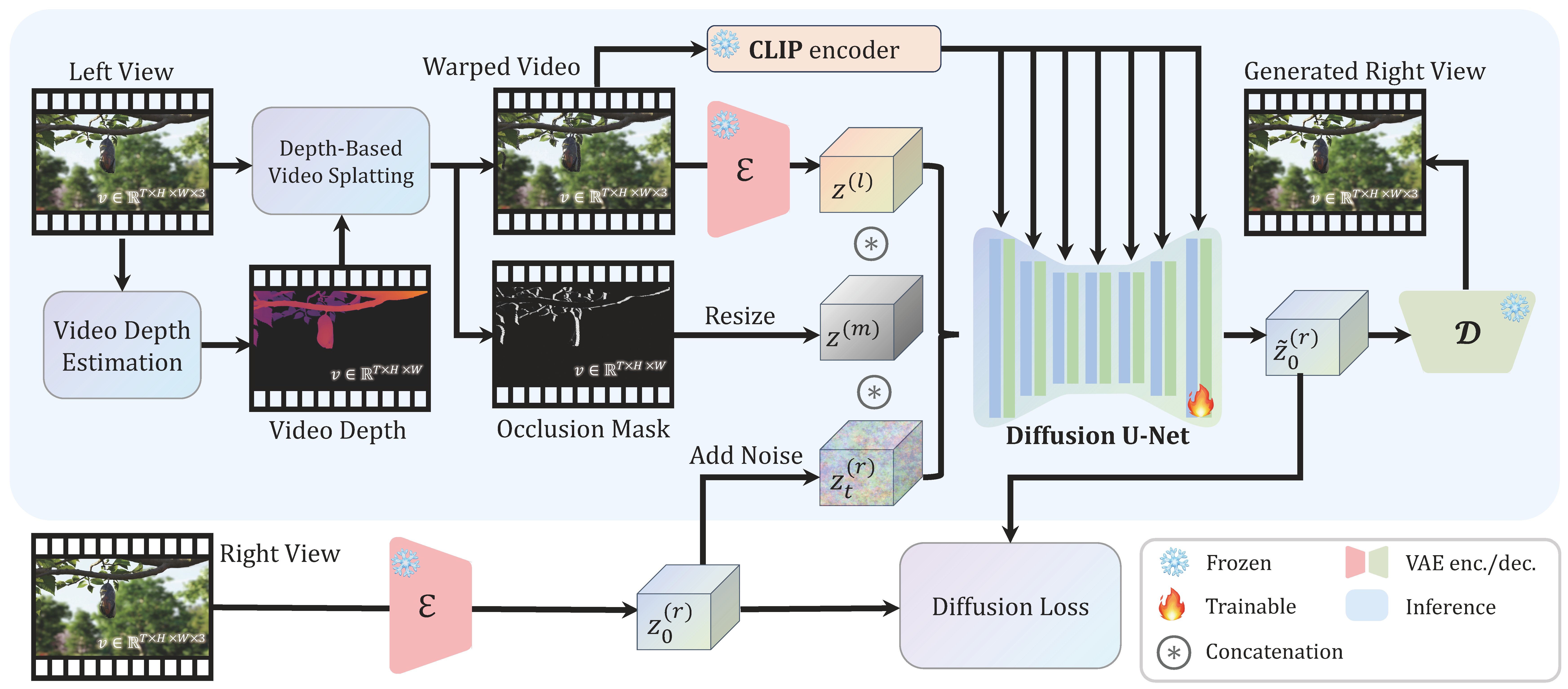

Overall Framework of StereoCrafter

Overall framework of StereoCrafter, which consists of two main stages. In the first stage, video depth is estimated from the monocular input video, which serves as the left view; depth-based video splatting then takes the left video and its depth as input to produce a warped video and the corresponding occlusion mask. In the second stage, a trained stereo video inpainting model fills the hole regions of the warped video, as indicated by the occlusion mask, to synthesize the right video.
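To make the first stage concrete, here is a minimal single-frame sketch of producing a warped view and an occlusion mask from disparity. It assumes disparity is already given in pixels and that the right view is obtained by moving each left pixel from x to x - d. It uses nearest-pixel forward warping with a z-buffer (larger disparity means closer, so it wins), a simplification chosen for illustration rather than the paper's actual splatting operator; the name `forward_warp` is hypothetical.

```python
import numpy as np


def forward_warp(left, disparity):
    """Forward-warp a left frame (H, W, C) to the right view.

    Each pixel (y, x) moves to (y, round(x - d)), where d is its disparity
    in pixels. When several pixels land on the same target, the one with
    the largest disparity (closest to the camera) wins, as in a z-buffer.
    Returns (warped, mask); mask is True at holes that received no pixel,
    i.e. the disoccluded regions an inpainting model must fill."""
    H, W = disparity.shape
    warped = np.zeros_like(left)
    best = np.full((H, W), -np.inf)  # largest disparity written so far
    for y in range(H):
        for x in range(W):
            d = disparity[y, x]
            xt = int(round(x - d))
            if 0 <= xt < W and d > best[y, xt]:
                best[y, xt] = d
                warped[y, xt] = left[y, x]
    mask = np.isinf(best)  # untouched targets are holes
    return warped, mask


# A 1x5 frame whose two middle pixels are closer (disparity 2).
left = np.arange(5, dtype=np.float64).reshape(1, 5, 1)
disp = np.array([[0.0, 0.0, 2.0, 2.0, 0.0]])
warped, mask = forward_warp(left, disp)
print(mask[0].tolist())  # [False, False, True, True, False]
```

The closer pixels shift left and cover the background at x = 0 and x = 1, leaving holes at x = 2 and x = 3. For a video, the function would be applied frame by frame with each frame's disparity map.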

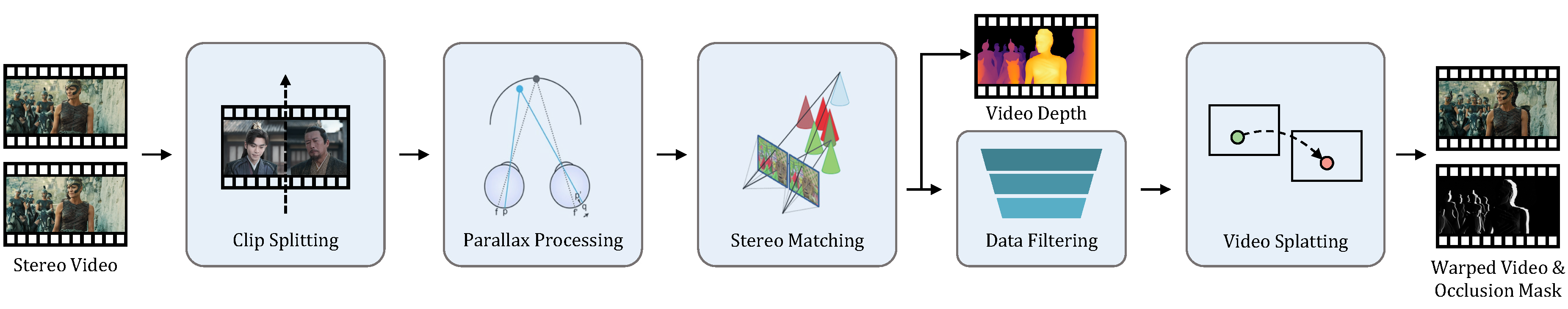

Dataset Construction

Pipeline for constructing the training dataset. After curating a large number of stereo videos, we generate, for each data sample, the video depth/disparity, the left video warped to the right viewpoint, and the occlusion mask, while the right video serves as the ground truth.
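Warping requires disparity in pixels, whereas depth estimators output depth. If the depth is metric and the focal length f (in pixels) and stereo baseline B are known, the standard rectified-stereo relation d = fB/Z converts between the two. The function names, the `eps` clamp, and the camera values below are illustrative assumptions; the source does not specify how depth is converted or scaled, and many monocular depth estimators produce only relative depth, which would need an additional scale.

```python
import numpy as np


def depth_to_disparity(depth, focal_px, baseline, eps=1e-6):
    """Pixel disparity for a rectified stereo pair: d = f * B / Z.
    `depth` and `baseline` must share a unit (e.g. metres); `eps`
    guards against division by zero at invalid depth values."""
    return focal_px * baseline / np.maximum(depth, eps)


def disparity_to_depth(disparity, focal_px, baseline, eps=1e-6):
    """Inverse relation: Z = f * B / d."""
    return focal_px * baseline / np.maximum(disparity, eps)


# Assumed camera: 1000 px focal length, 6.5 cm baseline.
depth = np.array([2.0, 4.0])  # metres
disp = depth_to_disparity(depth, 1000.0, 0.065)
print(disp.tolist())  # [32.5, 16.25]
```

Disparity is inversely proportional to depth, so halving the distance to an object doubles its pixel shift between the two views.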

More Demos: Free View Rendering from Monocular Video

More Demos: Watching the Generated Stereo Videos in Apple Vision Pro

Citations

@article{zhao2024stereocrafter,
  author = {Zhao, Sijie and Hu, Wenbo and Cun, Xiaodong and Zhang, Yong and Li, Xiaoyu and Kong, Zhe and Gao, Xiangjun and Niu, Muyao and Shan, Ying},
  title = {StereoCrafter: Diffusion-based Generation of Long and High-fidelity Stereoscopic 3D from Monocular Videos},
  journal = {arXiv preprint arXiv:2409.07447},
  year = {2024}
}

This page was built using a modified version of the Academic Project Page Template from vinthony. You are free to borrow the source code of this website; we just ask that you link back to this page in the footer. This website is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.